When deploying workloads to the public cloud, the question arises: how do you monitor them? This leads to further questions such as:

- Will my existing monitoring solution support Microsoft Azure workloads such as PaaS?

- Do I need to purchase extra licenses or upgrade my existing licenses?

- Do I need to have two different monitoring solutions? One for on-premises and one for the public cloud?

Each of the above questions then leads to a myriad of further questions around the deployment mechanism and how data is collected, stored and displayed. How are you alerted to issues or potential issues? How do you capacity plan for resources in the cloud? How do you monitor specific application workloads?

To answer these questions and more, Microsoft released Operations Management Suite (OMS), which became generally available in January 2016.

What is Operations Management Suite?

Operations Management Suite is ‘Management as a Service’ or MaaS for short. It runs in Microsoft Azure and can provide visibility into your on-premises and Microsoft Azure based workloads, providing a consistent monitoring approach across datacentres.

OMS is broken down into four key components which at a high level are:

- Insight and Analytics to collect, correlate, search and act on log and performance data generated by operating systems and applications, providing real-time analysis of information and potential issues.

- Automation & Control which enables a consistent approach to control and compliance by leveraging desired state configuration, change tracking and update management.

- Security and Compliance which focuses on identifying, assessing and mitigating risks to infrastructure by collecting and analysing security events to identify suspicious activity.

- Protection and Recovery to provide analysis and status updates of Azure Backup and Azure Site Recovery

The diagram below depicts a logical overview of the proposed Operations Management Suite environment.

Note: At the time of writing, OMS supports Azure Backup and Site Recovery in Classic Mode.

Operations Management Suite Components

The components of Operations Management Suite are broken down into three areas: agent, dashboard and solution packs.

- Agent is an in-guest service which can be pushed out automatically using Group Policy, System Center Configuration Manager or another deployment method. It is used to provide heartbeats and data back to the centralised Operations Management data repository

- Dashboard is the Operations Management Suite portal which runs in a browser. The dashboard can be customised with graphical views of valuable searches and solutions

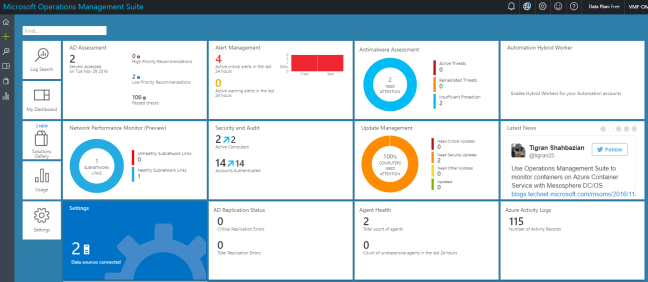

- Solution Packs are add-on services which add functionality and provide in-depth analysis of collected data. Examples of commonly deployed Solution Packs are:

- Malware Assessment which provides status of antivirus and antimalware scans across servers

- Change Tracking which tracks configuration changes across servers

- System Update Assessment which identifies missing system updates across servers

- AD Replication Status which identifies Active Directory replication issues

- SQL Assessment which assesses the risk and health of SQL Server environments

- AD Assessment which assesses the risk and health of Active Directory environments

Microsoft are continuously updating Solution Packs and a few which are in public preview are listed below:

- Azure Networking Analytics which enables you to gain insight into Network Security Groups and Application Gateway logs

- Capacity and Performance which enables you to view Hyper-V CPU, memory and storage utilisation

- Office 365 which provides visibility into user activities as well as forensics for audit and compliance purposes

- Network Performance Monitoring which offers real time monitoring of parameters such as loss and latency

- System Center Operations Manager Assessment which assesses the risk of your SCOM environment

- VMware Monitoring which provides the ability to explore ESXi host logs for monitoring, deep analysis and trending

The graphic below provides an example Operations Management Suite dashboard.

Workspaces

OMS uses the concept of workspaces, which are primarily an administrative boundary but are also used to collect data within an Azure region. Workspaces can be used to delegate responsibility to individual users or groups who undertake specific roles, e.g. giving the Network Team access to Network Performance Monitor.

It should be noted that workspaces are independent of each other and that data collected in one workspace cannot be viewed in another workspace. However, you can link multiple workspaces to a single Microsoft Azure subscription.

Workspaces also enable the use of different license plans. For example, in one workspace you might use the System Center Add On and in another workspace you might use Insight & Analytics.

Data Collection

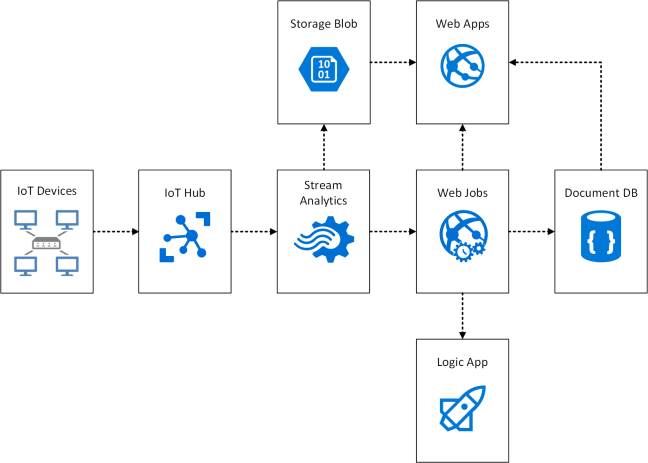

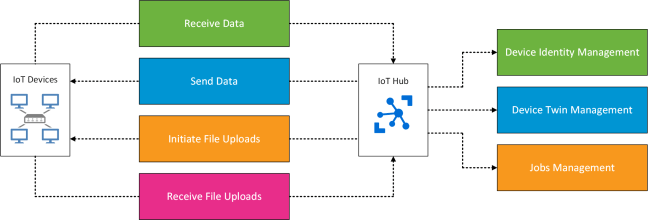

Operations Management Suite collects data on a real-time basis from three sources: in-guest agents installed on Windows or Linux; a System Center Operations Manager management group, which uses the SCOM management servers to forward events and performance data to Log Analytics; and an Azure Storage Account that collects data from PaaS and IaaS services. The data collected falls into the following categories:

- Logging which is data generated by the operating system or application such as event logs, IIS logs, syslogs or custom logs in the form of text files.

- Performance which uses the Windows or Linux performance counters to collect data such as memory, processor and disk information

- Solution specific items which provide in-depth analysis of application items

A logical overview of data collection is shown below.

Licensing

OMS can be licensed either on a pay-as-you-go basis or on a subscription basis. You can license all OMS components together as a ‘suite’, which reduces the overall cost, or pick only the components you need.

- Licenses are based on nodes; a node is defined as a physical computer, virtual machine or network device

- Node charges are hourly and nodes that only report for part of a month are prorated

- Each node can produce up to 500MB of data per day without incurring any extra charges

- OMS data retention is currently set to one month; plans to expand this to two years are in the pipeline
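To make the node-based arithmetic above concrete, here is a minimal Python sketch of how a prorated node charge and a daily data overage could be worked out. The function names and the monthly price are hypothetical and chosen purely for illustration; the only figures taken from the list above are the hourly proration and the 500MB per node per day allowance. Actual billing rules and prices should be checked against Microsoft's pricing pages.

```python
HOURS_PER_DAY = 24
FREE_MB_PER_NODE_PER_DAY = 500  # allowance quoted in the licensing list


def prorated_node_charge(hours_reported, days_in_month, monthly_price):
    """Charge for one node, scaled by the hours it reported in the month.

    monthly_price is a hypothetical figure, not a real OMS price.
    """
    hours_in_month = days_in_month * HOURS_PER_DAY
    if not 0 <= hours_reported <= hours_in_month:
        raise ValueError("hours_reported must be within the month")
    return round(monthly_price * hours_reported / hours_in_month, 2)


def daily_overage_mb(mb_sent_today):
    """Data above the per-node daily allowance, in MB (0 if within it)."""
    return max(0.0, mb_sent_today - FREE_MB_PER_NODE_PER_DAY)


if __name__ == "__main__":
    # A node that reported for half of a 30-day month at an assumed
    # price of 15.00 per node per month.
    print(prorated_node_charge(360, 30, 15.0))
    # A node that sent 650MB in one day.
    print(daily_overage_mb(650))
```

A node that is only online for part of the month is therefore charged in proportion to the hours it reported, while data volume is assessed separately against the daily allowance.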

Final Thought

OMS is maturing as a product and its integration points with on-premises environments are evolving. The ability to provide a centralised dashboard with application or vendor specific solution packs will make the product more appealing. Watch this space!
