Backups are essential for restoring service after an incident and for meeting compliance or regulatory requirements. An inability to recover lost data can bankrupt a business in a short space of time.

You might say that having your data in the cloud, which is highly available, makes backups someone else’s problem. That isn’t correct. What happens if you have data corruption, the failure of a service or application after an update, or perhaps a virus? Having two copies of the data just means you have two corrupted copies!

We need to be able to go back in time to recover from an unplanned event. This is where Microsoft Azure Backup steps into the ring!

What Is Azure Backup?

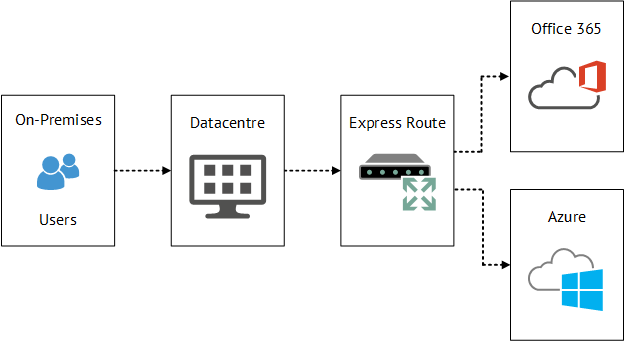

In a nutshell, Azure Backup enables you to back up on-premises or Azure virtual machines using your Azure subscription. This may sound a bit bizarre, but until recently there was no supported way to back up Azure virtual machines.

When you initially create your Azure Backup Vault, you decide whether you want your backups to be locally redundant or geo-redundant.

Backing Up Azure Virtual Machines

Backing up Azure virtual machines is fairly straightforward. It’s a three-step process, which is intuitive in the Azure Backup blade:

- Discover the virtual machines you want to back up

- Apply a backup policy to the virtual machines

- Back up the virtual machines

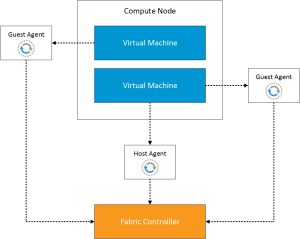

Each VM that you want to protect uses the Azure VM agent to co-ordinate backup tasks. The best way to think of the Azure VM agent is as an extension within the virtual machine. When a backup is triggered, the Azure VM agent leverages VSS to take a point-in-time snapshot of the VM. This data is then transferred to the Azure Backup Vault.

There are a few limitations of the current version of Azure Backup that I should call out:

- Backing up V2 Azure Virtual Machines (created by Resource Manager) is not supported

- VMs that use Premium storage cannot be backed up

- On restore you have to delete the original VM and then restore it

- Restoring VMs with multiple NICs, or VMs that perform Domain Controller roles, is only available via PowerShell
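If you want to sanity-check an inventory against the limitations above before you start, something along these lines could work. It is only a sketch: `VmProfile` and `backup_caveats` are hypothetical names made up for illustration, not part of any Azure SDK, and the rules simply mirror the list in this post, so they may not match later versions of the service.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmProfile:
    """Hypothetical description of an Azure VM; not an Azure SDK type."""
    name: str
    deployment_model: str  # "classic" (V1) or "resource_manager" (V2)
    uses_premium_storage: bool = False
    nic_count: int = 1
    is_domain_controller: bool = False


def backup_caveats(vm: VmProfile) -> List[str]:
    """Return the limitations from the list above that apply to this VM."""
    caveats = []
    if vm.deployment_model == "resource_manager":
        caveats.append("blocked: V2 (Resource Manager) VMs are not supported")
    if vm.uses_premium_storage:
        caveats.append("blocked: Premium storage is not supported")
    if vm.nic_count > 1 or vm.is_domain_controller:
        caveats.append("restore: PowerShell only (multiple NICs or Domain Controller)")
    return caveats


if __name__ == "__main__":
    inventory = [
        VmProfile("web01", "classic"),
        VmProfile("sql01", "classic", uses_premium_storage=True),
        VmProfile("dc01", "classic", is_domain_controller=True),
    ]
    for vm in inventory:
        print(vm.name, backup_caveats(vm) or "no caveats")
```

An empty list means none of the listed limitations apply. The restore step still involves deleting the original VM first, whichever VM it is.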

Backing Up On-Premises Virtual Machines

When Microsoft do things, they don’t like to mess about. What do I mean? Well they are giving you an enterprise backup solution for the cost of an Azure Storage Account!

They don’t even stop at that. They enable you to back up your on-premises virtual machines to disk first and then, if you want, back up to your Azure Backup Vault afterwards.

Essentially, it’s Data Protection Manager (DPM), but with some of the functionality removed. After creating an Azure Backup Vault you are entitled to download the Azure Backup Server software and install it on an on-premises server. To push out the DPM Agent to virtual machines, you need to enter an account that has local administrator rights over these VMs.

So what functionality is missing?

- Azure Backup Server supports a maximum of 50 on-premises virtual machines. Beyond that, a new Azure Backup Vault is required with new vault credentials, which in turn means another on-premises Azure Backup Server

- Azure Backup Server does not support tape, instead it uses Azure Backup Vault for archiving

- Azure Backup Server does not allow you to manage multiple Azure Backup Servers from a single console (with 200 VMs being backed up, you would have to log in to four consoles)
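The console count in that last point is just a ceiling division, which is handy when sizing a larger estate. A minimal sketch, assuming the 50 VM per server limit quoted above still holds (the function name `backup_servers_needed` is my own, purely for illustration):

```python
import math

# Per-server limit quoted in this post; treat it as an assumption and
# check the current documentation before planning around it.
MAX_VMS_PER_BACKUP_SERVER = 50


def backup_servers_needed(vm_count: int, per_server: int = MAX_VMS_PER_BACKUP_SERVER) -> int:
    """Return how many Azure Backup Servers (one vault and console each) are needed."""
    if vm_count < 0:
        raise ValueError("vm_count must not be negative")
    if per_server <= 0:
        raise ValueError("per_server must be positive")
    # Round up: a partly filled server still has to be deployed and managed.
    return math.ceil(vm_count / per_server)


if __name__ == "__main__":
    for vms in (50, 51, 200):
        print(f"{vms} VMs -> {backup_servers_needed(vms)} server(s)")
```

Note how tipping over the limit by a single VM (51 instead of 50) already means a second server, vault, and console to look after.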

The supported operating systems are shown in the table below. Note that client operating systems can only be backed up to disk, as they are not supported in Azure.

| Operating System | Platform | SKU |
| --- | --- | --- |
| Windows 8 and latest SPs | 64 bit | Enterprise, Pro |
| Windows 7 and latest SPs | 64 bit | Ultimate, Enterprise, Professional, Home Premium, Home Basic, Starter |
| Windows 8.1 and latest SPs | 64 bit | Enterprise, Pro |
| Windows 10 | 64 bit | Enterprise, Pro, Home |
| Windows Server 2012 R2 and latest SPs | 64 bit | Standard, Datacenter, Foundation |
| Windows Server 2012 and latest SPs | 64 bit | Datacenter, Foundation, Standard |
| Windows Storage Server 2012 R2 and latest SPs | 64 bit | Standard, Workgroup |
| Windows Storage Server 2012 and latest SPs | 64 bit | Standard, Workgroup |
| Windows Server 2012 R2 and latest SPs | 64 bit | Essential |
| Windows Server 2008 R2 SP1 | 64 bit | Standard, Enterprise, Datacenter, Foundation |
| Windows Server 2008 SP2 | 64 bit | Standard, Enterprise, Datacenter, Foundation |

Final Thoughts

So why are Microsoft doing this? My thoughts are that they want customers to start using Azure storage to replace on-premises tapes. For those who are used to DPM, this could be a natural extension to your existing backup policy.