One of my work colleagues, Mat Smith, pointed out that when you install the generic ESXi hypervisor from the VMware site you get basic HP or Dell hardware information, which is OK. However, if you only have local storage, you don’t know what state the underlying RAID configuration is in unless you have access to iLO or DRAC.

This is easily rectified by downloading the latest HP Custom Image for ESXi 5.1.0 ISO. At the time of writing this blog post, the latest release is VMware-ESXi-5.1.0-799733-HP-5.34.23.iso.
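If you keep several ISOs lying around, it helps to confirm which ESXi version, build and HP release you are about to import. The Python sketch below assumes the HP file names follow the pattern `VMware-ESXi-<version>-<build>-HP-<hp release>.iso`, based only on the example file name above; `parse_hp_image_name` and `HpImage` are hypothetical helpers, not part of any VMware or HP tool.

```python
import re
from typing import NamedTuple


class HpImage(NamedTuple):
    esxi_version: str  # e.g. "5.1.0"
    build: int         # VMware build number, e.g. 799733
    hp_version: str    # HP customisation release, e.g. "5.34.23"


# Assumed naming pattern, inferred from VMware-ESXi-5.1.0-799733-HP-5.34.23.iso
_PATTERN = re.compile(r"^VMware-ESXi-(\d+\.\d+\.\d+)-(\d+)-HP-(\d+(?:\.\d+)*)\.iso$")


def parse_hp_image_name(filename: str) -> HpImage:
    """Split an HP custom ESXi ISO file name into its parts (hypothetical helper)."""
    match = _PATTERN.match(filename)
    if match is None:
        raise ValueError(f"not an HP custom ESXi image name: {filename!r}")
    version, build, hp_version = match.groups()
    return HpImage(version, int(build), hp_version)


if __name__ == "__main__":
    print(parse_hp_image_name("VMware-ESXi-5.1.0-799733-HP-5.34.23.iso"))
```

A file name that doesn't match the assumed pattern, such as a generic VMware ISO, raises a `ValueError` rather than returning a half-parsed result.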

Once you have downloaded the ISO, go into Update Manager > Admin View > ESXi Images and select Import ESXi Image.

Select your HP Custom Image for ESXi 5.1.0 ISO and click Next.

The upload should only take a minute or so. Once you see that the HP Custom Image for ESXi 5.1.0 ISO has been uploaded, hit Next.

Next we need to create a baseline from the image. I’m going to roll with the name HP Custom Image ESXi 5.1.0, then click Finish.

Fingers crossed, you should now see the imported image.

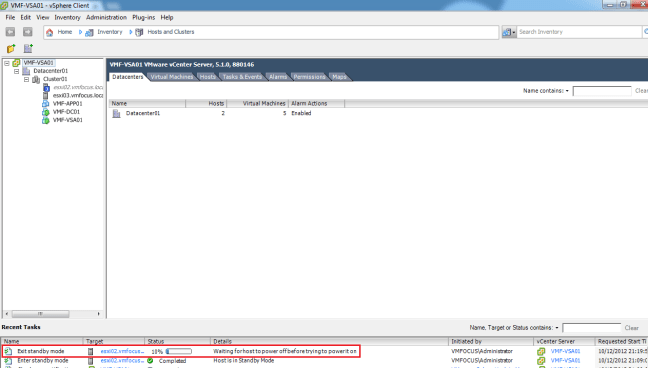

Next, go to the Hosts and Clusters View, select the Update Manager tab and then select Attach.

Select your Upgrade Baseline and click Attach.

Next, select Scan, choose Upgrades and then select Scan again.

Surprisingly enough, after the scan completes you will notice that your ESXi Hosts are no longer compliant, as they are not yet running the image in the baseline.

I tend to perform Baseline Upgrades on ESXi Hosts individually, rather than at Cluster level, just in case anything goes wrong. With this in mind, go to your first ESXi Host and select Remediate.

Select Upgrade Baseline, choose HP Custom Image ESXi 5.1.0 and hit Next.

Accept the EULA and hit Next

Whoa, what’s this message? ‘Remove installed third party software that is incompatible with the upgrade, and continue with remediation?’ A word of warning: you might want to check with your IT team to make sure that you aren’t going to lose any functionality.

Enter a Task Name & Description and hit Next

On the Host Remediation Options page, make sure you tick ‘Disable any removable media devices connected to the virtual machines on the host’, as we don’t want an attached ISO to be the cause of a failed remediation. When you are ready, hit Next.

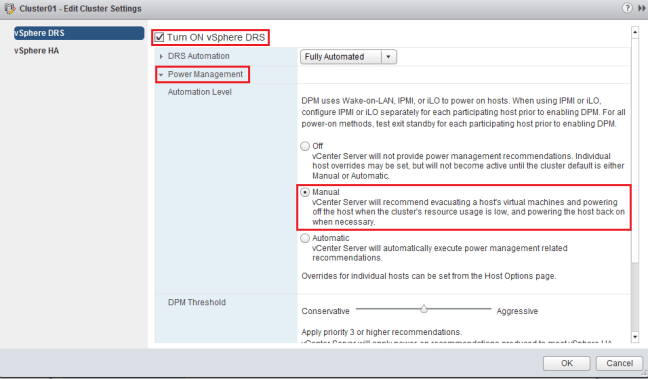

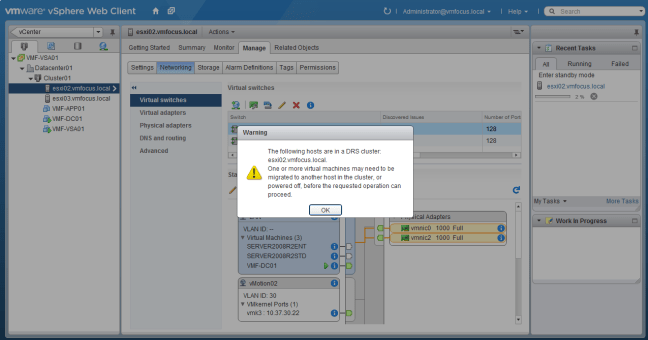

On the Cluster Remediation Options page, I tend to make sure that both DPM and HA Admission Control are disabled so that the ESXi Host can actually enter maintenance mode and be patched. Then click Next.

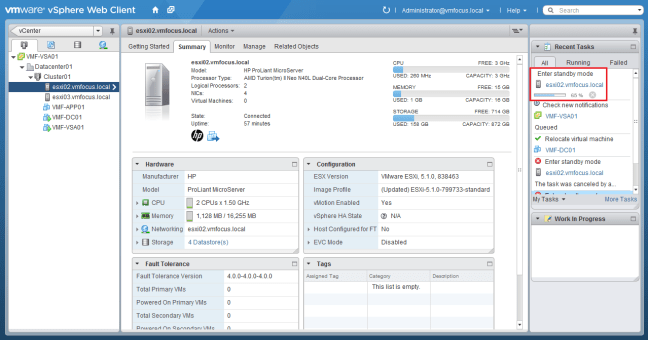

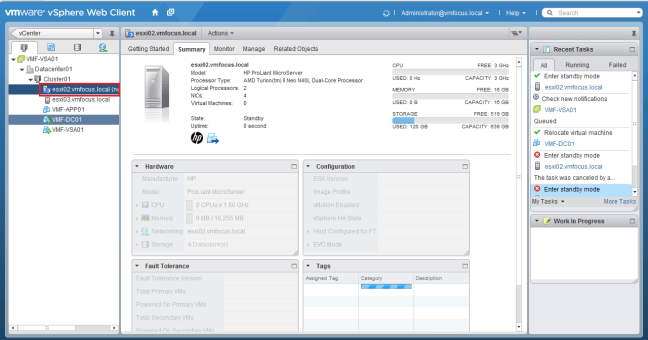

Once you are happy with your Upgrade Baseline settings, click Finish. Time to go and make a brew, as this is going to take a long time!

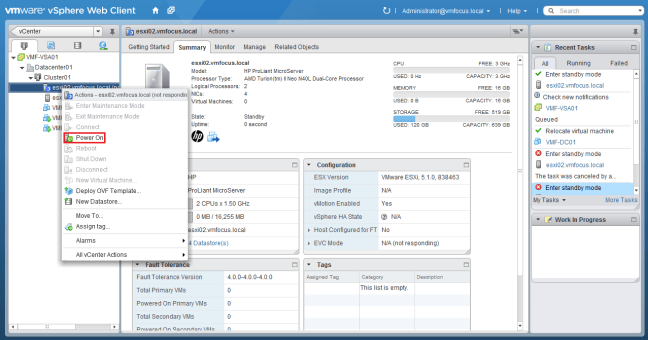

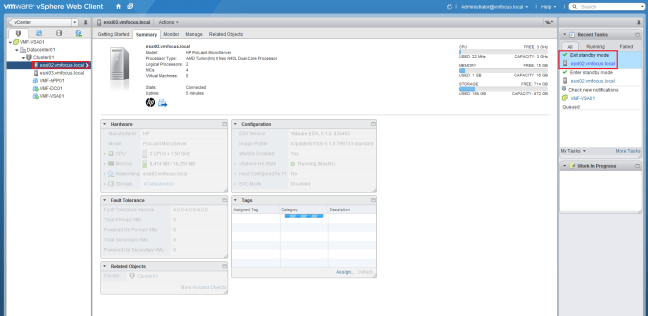

Awesome! Now that’s completed, we can see the Local Storage on the ESXi Host.

Rinse and repeat for the rest of your ESXi Hosts.